Thank you for the helpful tips and resources; I’ll try them out right away! As for the prompts, I tried to follow the examples in the demo videos, which wasn’t easy because most of them were cut off.

I also realised later that Cursor IDE and Opus 4.5 had been used in the demos. As I don’t have a premium Cursor subscription, I could only set the model to ‘auto’, and I didn’t get a single result. Although the Penpot MCP server was running and connected, and I could see that the MCP server and its tools were recognised correctly, the LLM still couldn’t access them. As a workaround, the model generated complete JavaScript code, which I then had to enter manually via the Penpot API REPL. I tried this, but it didn’t work.

That’s why I’m currently working with VSCode, where I’ve tried various models such as Codex, GPT-5.2 and Opus 4.5. Unfortunately, I haven’t obtained any usable results yet. I also realised that I was being far too general. My goals are to recreate a finished HTML/CSS page in Penpot and to generate design variations exclusively using components from the library.

So far, I have had the following results: (1) designs are not created at all, or are only partially created and not in a usable state, (2) components from the library are not used, or (3) elements are cut off.

I also made attempts without being too specific; for example, I prompted the model to create ‘just’ a button. The BookNook website has been the most successful so far, but I’m no longer sure which model or prompt I used; the model could have been GPT-5.2.

In any case, I’ll continue to try out your suggestions. Unfortunately, I can’t use Cursor here. I’ll let you know if I find a setup that works well.

Thank you all for your great work!

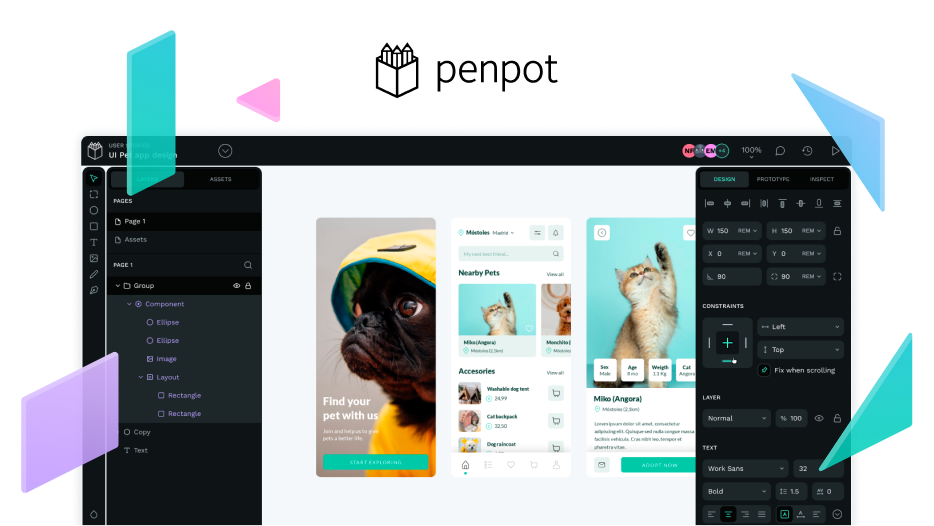

Here are examples of my first attempts. I mainly tried either to create designs in Penpot using components from the library, or to generate Penpot designs and components from finished source code plus a reference image.

Cards

Card using components from the library